Introduction to Quantitative Methods

Instructor: Elisabeth D. Root

Important notes on time series analysis

Slides: http://www.colorado.edu/geography/class_homepages/geog_4023_s11/lectures.html

Since “noise” is by definition unpredictable, all the useful information is in the trend, seasonality, etc.

-Construct a series from simple assumptions about each of the individual components

Three typical steps in the “reduction-to-noise” process:

-A data transformation such as taking logarithms of the data

-Removing seasonality and trend to obtain a stationary process.

-Fit a standard time series model

The “reduction-to-noise” procedure does not always proceed in a linear fashion

-One usually jumps back and forth between the three steps, revisiting each of them over several attempts

Steps in a classical time series analysis

1.Do a time plot of the time series

2.Describe the variability of the series seen in the plot:

-Is there a trend? Is the trend in mean and variance? Or only one of them?

-Is there a seasonal pattern? What is the period?

-Is there any additional irregular variability?

3.Use time series plots to determine whether transformations are necessary

4.Transform the data if necessary

-Log or square root transforms

5.Use time plots and test statistics to determine if the series is stationary (constant mean and variance)

6.Make the series stationary if it is not

7.Fit TS model to series and analyze residuals

8.When a good model is found, forecast the future

Terminology

-Dependence: Correlation of observations of one variable at one point in time with observations of the same variable at prior points in time

Serial correlation or autocorrelation

-Stationarity: The mean value of the series remains constant over the time series (e.g., no systematic change in the mean, no trend)

Also, variance should remain constant

-Differencing: data pre-processing step which de-trends the data to achieve stationarity

Subtract from each data point its predecessor

Most methods in TS analysis are concerned with stationary time series

-Specification: using diagnostic tests, specifying the type of time series model to apply to the series

Auto-regressive (AR), Moving average (MA), ARMA (combined) or ARIMA (combined integrated)

Also could have non-linear models

A trend is a long term change in the mean and/or variance of the series

e.g., if you computed the mean of the series at several different intervals, the mean would be different in each

If the trend is not immediately apparent (usually due to a large error component) we can identify it using a smoothing process

Once we have identified the trend we can model it: Fit a linear regression model to the data

We can remove a trend in two ways:

-Regression: fit a linear/quadratic/polynomial function to the trend and subtract the fitted values from each observation

-Differencing: subtract from each observation its predecessor (Xt - Xt-1)

Often the whole point of modeling a trend is to create a residual series that is used for time series analysis

The simplest trend model for a linear trend

Xt = alpha + beta t + white noise

alpha + beta t = mean of the series at time t

If alpha and beta are assumed constants, the trend is called deterministic

If alpha and beta are assumed random, then the trend is stochastic

Once we have found a trend model, we can use the model to predict future values, or to detrend the data

Detrended data = Xt-fitted trend (residuals)
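A minimal sketch of fitting and removing a linear trend. The series is simulated, and the values alpha = 10, beta = 0.5 and the noise level are illustrative assumptions, not course data:

```r
# Simulate Xt = alpha + beta*t + white noise (illustrative values)
set.seed(1)
tt <- 1:200
x <- 10 + 0.5 * tt + rnorm(200, sd = 2)

# Fit the linear trend model by least squares
trend.fit <- lm(x ~ tt)
coef(trend.fit)                 # estimates of alpha and beta

# Detrended data = Xt - fitted trend (the regression residuals)
x.detrended <- x - fitted(trend.fit)

# Alternative: first differences Xt - X(t-1) also remove a linear trend;
# their mean is a rough estimate of the slope beta
x.diff <- diff(x)
mean(x.diff)
```

Note that differencing shortens the series by one observation, while regression detrending keeps its length.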

Seasonality: variation (increase or decrease in the series) that is annual in period

In most cases, we estimate the seasonality not to include it in the forecasting model, but simply to remove it

A logarithmic transformation will convert a series with multiplicative seasonality to one with additive seasonality

-Smooth the series

Once we detrend and remove seasonality, all that is left is the random or error component

To make a series X stationary

1.Check if there is variance that changes with time

-Make variance constant with log or square root transformation

-Call the transformed data X*

2.Remove the trend in mean with regular differencing or fitting a trend line

-Call the new series X**

-The correlogram of X** should only have a few significant spikes at small lags

3.If there is a seasonal cycle left in the data, we must seasonally difference the series too

-Call the new series X***
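The three steps above can be sketched with the AirPassengers series that ships with R. The choice of a log transform and of lag 12 for the seasonal difference are assumptions that suit this monthly series; other data may need different choices:

```r
X <- AirPassengers                 # monthly series from the datasets package

# Step 1: stabilize the variance
X1 <- log(X)                       # X*

# Step 2: remove the trend in mean with regular (lag-1) differencing
X2 <- diff(X1)                     # X**

# Step 3: remove the remaining seasonal cycle with a lag-12 difference
X3 <- diff(X2, lag = 12)           # X***

# Autocorrelations at each stage; drop plot = FALSE to draw the correlogram
r2 <- acf(X2, lag.max = 24, plot = FALSE)
r3 <- acf(X3, lag.max = 24, plot = FALSE)
```

Each differencing step costs observations: one for the regular difference and twelve for the seasonal one.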

Data:

year,month,mean,interp

1958,3,315.71,315.71

.

.

.

2010,1,388.63,388.63

mloa<-read.table("http://www.colorado.edu/geography/class_homepages/geog_4023_s11/monaloa.txt", header=T, sep=",")

names(mloa) #shows the column names

mlco2<-ts(mloa$interp, start=c(1958,3), end=c(2010,1), frequency=12)

plot(mlco2, ylab="Mean CO2 (PPM)")

ts.plot(mloa$interp, ylab="Mean CO2 (PPM)")

One way to look at seasonality

> boxplot(mlco2~cycle(mlco2))

Classical decomposition

mlco2.dec<-decompose(mlco2, type="multiplicative")

plot(mlco2.dec)

The original AirPassengers series has non-stationary variance (step 1 of making a series stationary)

library("fpp")

logAP<-log(AirPassengers)

plot(logAP, ylab="log(Air Passengers (1000s))")

Stabilize the variance by transforming the series with the logarithmic function. Notice the y-axis in both plots

What is a stationary time series?

“…a time series is said to be stationary if there is no systematic change in mean (no trend), if there is no systematic change in variance and if strictly periodic variations have been removed.” (Chatfield: 13)

Correlogram has very few significant spikes at very small lags and cuts off drastically/dies down quickly (at 2 or 3 lags)

Stochastic vs. deterministic: these terms describe the “quality” of the nonstationarity of a series

Stochastic = inexplicable changes in direction

-Often found in economic processes, sometimes climate

-“Random walk” process

*Use differencing and autoregressive models

Deterministic = plausible physical explanation for a trend or seasonal cycle

-Increase in population, orbit of the earth

*Use regression models
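A small simulated contrast between the two kinds of trend. The slope 0.05 and the series length are illustrative assumptions:

```r
set.seed(42)
n <- 300
e <- rnorm(n)

# Stochastic trend: a random walk, x[t] = x[t-1] + e[t]
rw <- cumsum(e)

# Deterministic trend: a fixed line plus the same noise
tt <- 1:n
det <- 0.05 * tt + e

# Differencing the random walk recovers the white noise
rw.diff <- diff(rw)

# Regression removes the deterministic trend
det.resid <- residuals(lm(det ~ tt))
```

Here rw.diff equals e[-1] up to rounding, which is why differencing is the natural tool for a random walk, while a regression fit is the natural tool for the fixed line.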

Checking for stationarity

logAP<-log(AirPassengers)

kpss.test(logAP, null = "Trend")

kpss.test(AirPassengers, null = "Trend")

adf.test(logAP, alternative = "stationary")

adf.test(AirPassengers, alternative = "stationary")

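Decision rule: compare the p-value with the significance level (0.05). If the p-value is greater than 0.05, we cannot reject the null hypothesis; if it is smaller, we reject it

KPSS (null hypothesis: the series is stationary): 0.01 < 0.05, so we can reject the null, which means the series is non-stationary

ADF (null hypothesis: the series is non-stationary): 0.8 > 0.05, so we cannot reject the null, which means the series is non-stationary

KPSS and ADF use opposite null hypotheses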

Example:

> kpss.test(logAP, null = "Trend")

KPSS Test for Trend Stationarity

data: logAP

KPSS Trend = 0.121, Truncation lag parameter = 2,

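p-value = 0.09626

Is 0.09626 > 0.05? Yes (0.09626 - 0.05 = 0.04626, which is positive)

Since the p-value exceeds 0.05, we cannot reject the KPSS null hypothesis, which means the series is trend-stationary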
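The decision rule can be written as a small function. This is a hypothetical helper for these notes (stationarity.verdict is not part of tseries), and the 0.05 cutoff is the conventional default:

```r
# Hypothetical helper: turn a test's p-value into a working verdict.
# KPSS null hypothesis: the series is stationary.
# ADF  null hypothesis: the series is non-stationary (has a unit root).
stationarity.verdict <- function(test = c("kpss", "adf"), p.value, alpha = 0.05)
{
  test <- match.arg(test)
  reject <- p.value < alpha          # reject the null when p is small
  if (test == "kpss") {
    if (reject) "non-stationary" else "stationary"
  } else {
    if (reject) "stationary" else "non-stationary"
  }
}

stationarity.verdict("kpss", 0.01)     # null rejected
stationarity.verdict("adf", 0.8)       # null not rejected
stationarity.verdict("kpss", 0.09626)  # null not rejected
```

Failing to reject a null hypothesis is not proof that it is true, so the verdict is a working conclusion and is best checked against the time plot and correlogram.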

Smoothing is often used to remove an underlying signal or trend (such as a seasonal cycle)

Common method is the centered moving average
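A sketch of a centered moving average in base R, assuming monthly data (the logged AirPassengers series is used only as an example):

```r
x <- log(AirPassengers)

# Weights for a centered 12-month moving average: the two end months get
# half weight so that the 13-point window is centered on a month
w <- c(0.5, rep(1, 11), 0.5) / 12

# sides = 2 centers the window on each observation
x.smooth <- stats::filter(x, filter = w, sides = 2)

# The first and last 6 values are NA because the window runs off the series
sum(is.na(x.smooth))
```

Because the window spans exactly one year, the seasonal cycle averages out and x.smooth follows the trend.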

> acf(gwn, main = "ACF")

> qqnorm(gwn)

> pacf(gwn, main = "PACF")

>tsdisplay(z.ts) #provides a time plot along with an ACF and PACF for a TS object

Autoregressive (AR) and moving average (MA)

The AR model includes lagged terms of the time series itself

The MA model includes lagged terms on the noise or residuals

How do we decide which to use?

-ACF and PACF

The autocorrelation function (ACF) is a set of correlation coefficients between the series and lags of itself over time

The partial autocorrelation function (PACF) is the partial correlation coefficients between the series and lags of itself over time

If the PACF displays a sharp cutoff while the ACF decays more slowly (i.e., has significant spikes at higher lags), we say that the series displays an “AR signature”

NOTE: The lag at which the PACF cuts off is the indicated number of AR terms

In simple terms

> library("fpp")

> livestock

> tsdisplay(livestock)

If the ACF decreases gradually as the lag increases AND the PACF cuts off suddenly after a few lags, this is an AR signature

The diagnostic patterns of ACF and PACF for an AR(1) model are:

-ACF: declines in geometric progression from its highest value at lag 1

-PACF: cuts off abruptly after lag 1

The opposite types of patterns apply to an MA(1) process:

-ACF: cuts off abruptly after lag 1

-PACF: declines in geometric progression from its highest value at lag 1

For the ARMA(1,1), both the ACF and the PACF exponentially decrease
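These patterns can be checked against the theoretical ACF and PACF, which base R computes with ARMAacf(). The coefficient 0.7 is an illustrative assumption:

```r
# Theoretical ACF/PACF for an AR(1) process with coefficient 0.7
ar.acf  <- ARMAacf(ar = 0.7, lag.max = 5)               # lags 0 to 5
ar.pacf <- ARMAacf(ar = 0.7, lag.max = 5, pacf = TRUE)  # lags 1 to 5

# Theoretical ACF/PACF for an MA(1) process with coefficient 0.7
ma.acf  <- ARMAacf(ma = 0.7, lag.max = 5)
ma.pacf <- ARMAacf(ma = 0.7, lag.max = 5, pacf = TRUE)

round(ar.acf, 3)    # geometric decline: 0.7^lag
round(ar.pacf, 3)   # cuts off after lag 1
round(ma.acf, 3)    # cuts off after lag 1
round(ma.pacf, 3)   # shrinks in magnitude as the lag increases
```

Sample ACF and PACF plots from real data only approximate these shapes, so the cutoffs are rarely this clean in practice.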

Much of fitting ARMA models is guesswork and trial and error!

In most cases, the best model turns out to be a model that uses either only AR terms or only MA terms

ARIMA Modeling

What is an ARIMA model?

Type of ARMA model that can be used with some kinds of non-stationary data

-Useful for series with stochastic trends

-First order or “simple” differencing

-Series with deterministic trends should be differenced first then an ARMA model applied

The “I” in ARIMA stands for integrated, which basically means you’re differencing

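R Code for choosing the best ARIMA model

get.best.arima <- function(x.ts, maxord = c(1,1,1))
{
  best.aic <- 1e8
  n <- length(x.ts)
  for (p in 0:maxord[1]) for (d in 0:maxord[2]) for (q in 0:maxord[3])
  {
    #skip orders for which the fit fails instead of stopping the search
    fit <- tryCatch(arima(x.ts, order = c(p,d,q)), error = function(e) NULL)
    if (is.null(fit)) next
    #penalized likelihood with a log(n)+1 penalty per coefficient (stricter than the standard AIC)
    fit.aic <- -2 * fit$loglik + (log(n) + 1) * length(fit$coef)
    if (fit.aic < best.aic)
    {
      best.aic <- fit.aic
      best.fit <- fit
      best.model <- c(p,d,q)
    }
  }
  #returns the best criterion value, the fitted model and its order (p,d,q)
  list(best.aic, best.fit, best.model)
}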

Then enter the following into R

> get.best.arima(TS, maxord=c(2,2,2))

See the result for the best ARIMA fit

When fitting ARIMA models with R, an intercept term is NOT included in the model if there is any differencing
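This can be seen in the coefficient names returned by arima(). A minimal sketch on a simulated AR(1) series (the coefficient 0.6 and the mean 5 are illustrative assumptions):

```r
set.seed(7)
# Simulated AR(1) series around a mean of 5
x <- arima.sim(model = list(ar = 0.6), n = 300) + 5

fit.d0 <- arima(x, order = c(1, 0, 0))   # no differencing
fit.d1 <- arima(x, order = c(1, 1, 0))   # one regular difference

names(coef(fit.d0))   # includes an intercept term
names(coef(fit.d1))   # no intercept term
```

If a drift is needed in a differenced model, one common workaround is to pass a time index through the xreg argument of arima().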

gas<-read.table("http://www.colorado.edu/geography/class_homepages/geog_4023_s11/gas.dat", header=F, sep=",")

plot(gas, xlim=c(1973,1991))

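nobs <- NROW(gas) #number of observations; length() would count columns if gas is a data frame

#gas.arima is a fitted arima() model that is not defined in these notes; the exp() below suggests it was fitted to the logged series

gas.pred <- predict(gas.arima, n.ahead=36, newxreg=(nobs+1):(nobs+36))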

lines(exp(gas.pred$pred), col="red")

Detecting the trend with an ARIMA model is implicit

-Can’t calculate the exact slope of the trend line

-If the autocorrelation at the seasonal period is positive, consider adding an SAR term to the model

-If the autocorrelation at the seasonal period is negative, consider adding an SMA term to the model

Cross-Correlation

How can we study the relationship between 2 or more time series?

The cross correlation function (CCF) is helpful for identifying lags of the x-variable that might be useful predictors of yt

*When one or more xt+h are predictors of yt, and h (the significant lag) is negative, it is sometimes said that x leads y

*When one or more xt+h are predictors of yt, and h is positive, it is sometimes said that x lags y

In some problems, the goal may be to identify which variable is leading and which is lagging

We will want to use values of the x-variable to predict future values of y

ccf(x-variable name, y-variable name)

If you wish to specify how many lags to show, add that number as an argument of the command

ccf(x, y, lag.max=50)
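A simulated sketch of reading the sign of the lag. The delay of 3 time steps and the noise level are illustrative assumptions:

```r
set.seed(99)
n <- 500
x <- rnorm(n)

# y follows x with a delay of 3 time steps: y[t] = x[t-3] + small noise
y <- c(rep(0, 3), x[1:(n - 3)]) + rnorm(n, sd = 0.2)

# plot = FALSE returns the estimates; drop it to draw the CCF
r <- ccf(x, y, lag.max = 10, plot = FALSE)

# Lag h with the largest cross-correlation between x[t+h] and y[t]
best.lag <- r$lag[which.max(r$acf)]
best.lag              # negative, so x leads y
```

Because ccf(x, y) at lag h estimates the correlation between x[t+h] and y[t], a peak at a negative h means past values of x line up with current values of y.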

-----------------------------------------------------

STL is a very versatile and robust method for decomposing time series. STL is an acronym for “Seasonal and Trend decomposition using Loess”, while Loess is a method for estimating nonlinear relationships.

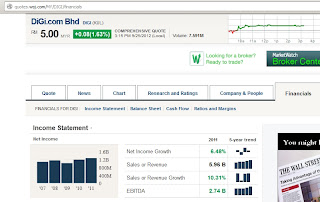
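A minimal STL sketch using only base R, assuming the logged AirPassengers series as example data:

```r
x <- log(AirPassengers)

# s.window = "periodic" forces the same seasonal pattern in every year;
# robust = TRUE downweights outliers in the loess fits
fit <- stl(x, s.window = "periodic", robust = TRUE)

# The three components are the columns of fit$time.series
head(fit$time.series)

# Seasonal + trend + remainder add back up to the original series
recon <- rowSums(fit$time.series)
```

Calling plot(fit) draws the data, seasonal, trend and remainder panels in one figure.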

SUMMARY

gld = read.csv("http://ichart.finance.yahoo.com/table.csv?s=6947.KL&ignore=.csv", stringsAsFactors=F)

z.ts = ts(gld$Adj.Close, start=2009)

tstkr <- ts(as.numeric(z.ts), deltat=1/12) #convert to univariate series

library("tseries")

fit <- stl(tstkr, t.window=15, s.window="periodic", robust=TRUE)

library("forecast")

fcast <- forecast(fit, method="naive")

plot(fcast, ylab="Adjusted close")
